In an age dominated by technological advancements, the specter of artificial intelligence (AI) looms large over our collective consciousness. Yet beneath the algorithmic façades lies a phenomenon that transcends the simple interaction of humans and machines: AI anthropomorphism. The tendency to attribute human traits, emotions, and intentions to non-human entities is as old as humanity itself, and it colors how we use AI. But what does this mean for our understanding of AI? The implications are as intricate as the very systems we create.

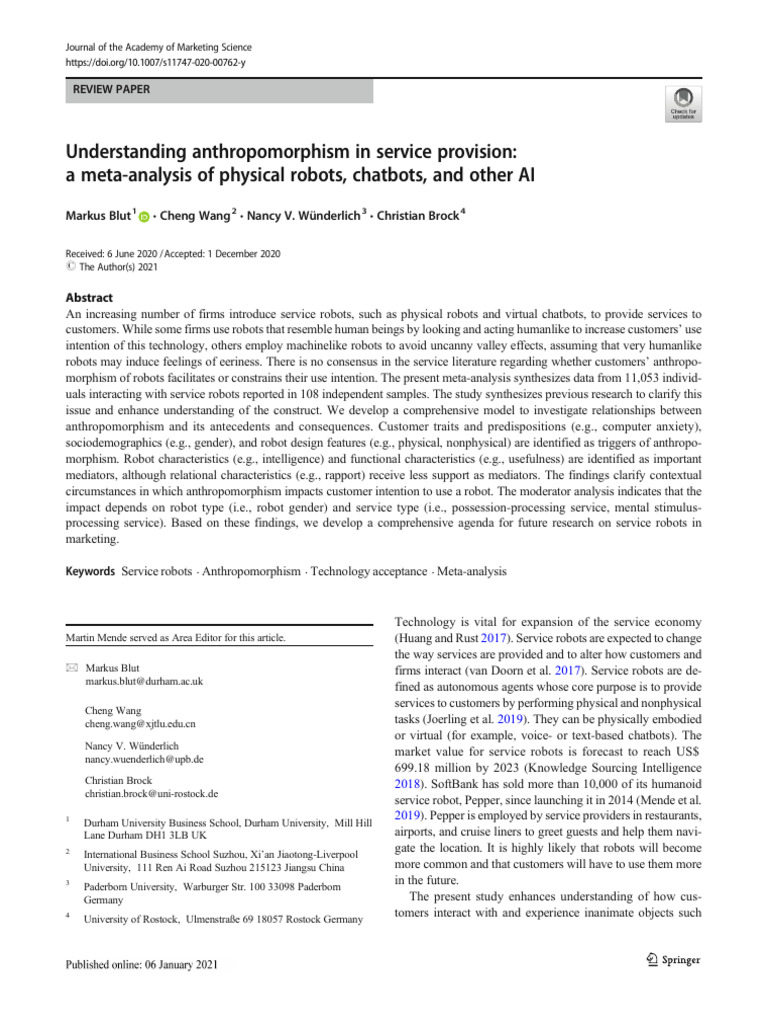

At its core, anthropomorphism arises from a deep-seated psychological impulse. Humans are naturally inclined to seek connection, often ascribing emotional characteristics to the world around them. Whether it’s the lovable cartoon characters of our childhood or the latest voice-activated assistant at home, we yearn for relatability. This act of humanization serves as a bridge, turning cold, metallic constructs into companions or confidants. The question that looms is whether this behavior aids or hinders our interaction with AI.

Imagine for a moment a computer that seems sentient, responding to your requests not with a staccato of binary code but with a soothing, empathetic tone. This relational dynamic can feel comforting. By embracing it, we elevate technology from a mere tool of convenience into the realm of companionship. Yet herein lies the duality: while anthropomorphism fosters connection, it can also undermine the practicality often required in human-AI interactions.

Consider the role of anthropomorphism in the realm of decision-making, particularly in AI applications such as autonomous vehicles or healthcare systems. When we envision an autonomous car—a sophisticated amalgamation of sensors and algorithms—as having “intelligence” akin to that of a human driver, we may unintentionally overlook critical nuances. The alluring metaphor of the “smart” car beckons us to trust its judgment, yet this trust must be tempered with the understanding that its “thought process” is devoid of human instinct, empathy, and, crucially, moral reasoning.

In healthcare applications, we entrust AI to make pivotal diagnostic decisions or suggest treatment plans. When a patient interacts with a virtual healthcare assistant, the language embedded into these systems often evokes empathy—suggesting care, concern, and hope. While such anthropomorphism calms the anxieties prevalent in medical scenarios, it may lead to unrealistic expectations. The patient may feel a false sense of security, believing that the AI is more akin to a guardian angel than a complex algorithm crunching vast datasets.

Moreover, the enchantment of anthropomorphic AI extends into realms like entertainment, where characters designed to emulate human emotions capture our hearts. Yet it is this same endearing quality that complicates our recognition of AI’s limitations. When we cheer for a robot in a movie as it experiences love or loss, we risk conflating its artificial mimicry of emotion with genuine sentiment. This blurring of the lines between reality and fiction presents a formidable challenge in nurturing an accurate understanding of what AI can and cannot do.

Notably, anthropomorphism can engender ethical dilemmas. As we increasingly imbue AI with human-like attributes, we wrestle with the moral implications of granting machines roles traditionally held by humans. Should an AI be allowed to act as a parental figure or an emotional support system? The ramifications of such interactions could range from the trivial to the tumultuous. The risks escalate—how do we draw lines when the technology itself appears so relatable? The question of culpability surfaces: if an AI wreaks havoc while acting on its programmed algorithms, are we partially to blame for humanizing it?

To navigate this quagmire, we need a cultural shift in how we understand AI. First and foremost, we must examine our own anthropomorphic habits critically. A more analytical mindset can counter the emotional attachment that often clouds judgment. By fostering discussions on the limitations of AI, from its lack of genuine emotion to the persistence of biases in its programming, we gain a clearer lens through which to view our interactions with technology.

Furthermore, more rigorous training and education about AI in our schools and communities could demystify anthropomorphic technology. Developing tools that help individuals engage with technology thoughtfully, rather than sentimentally, could dispel some of the allure that clouds our perception. It is equally important to acknowledge the roots of human anxiety surrounding technology. By confronting our relational tendencies, we may temper the idealization of AI and ground our engagements in reality.

In conclusion, the phenomenon of AI anthropomorphism reveals an intriguing paradox. It encapsulates the human longing for companionship and understanding while simultaneously posing challenges to our capacity for realistic engagement with technology. As we continue to inhabit a world increasingly interwoven with AI, reflecting critically on our tendency to humanize these constructs is crucial. The enchantment of anthropomorphism is tantalizing, yet it is our responsibility to temper it with discernment and wisdom, so that in our quest for connection we do not lose sight of the very nature of the technology we embrace.